The Silent Battleground: Information War and Active Measures

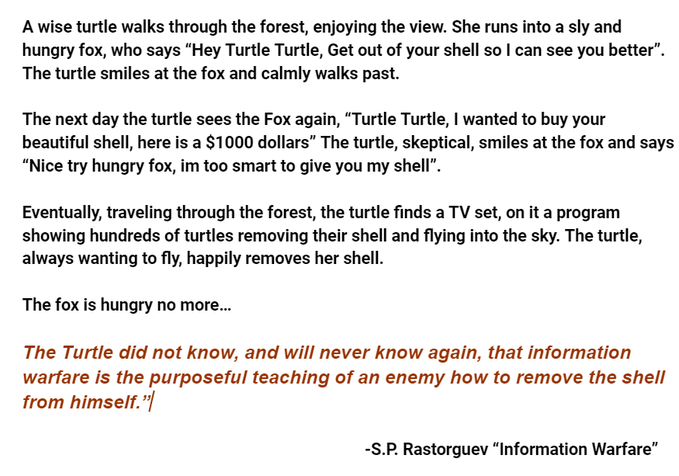

In the shadowy corridors of global politics, a war is being waged not with guns and bombs, but with words and information. This is the realm of information warfare, a conflict that transcends physical borders and penetrates the very psyche of nations. At the heart of this war are active measures, a term that harks back to the Cold War era, describing a suite of covert operations aimed at influencing events and public perception.

Active measures encompass a broad spectrum of tactics, from disinformation and propaganda to cyber attacks and political manipulation. These are the tools of modern statecraft, wielded by nations to assert dominance, sow discord among adversaries, and shape the global narrative to their advantage.

A threat actor can combine AI, active measures, media, and filter bubbles into a single operation. Such an operation might involve the following steps:

- Data Collection: The threat actor collects vast amounts of data from various sources, including social media, forums, and other platforms.

- Profile Building: Using AI, they analyze this data to build detailed profiles of individuals and groups, identifying their preferences, biases, and beliefs.

- Content Creation: The threat actor then creates or curates content tailored to these profiles. This could include fake news articles, deepfake videos, or manipulated images that align with the target audience’s existing beliefs.

- Amplification: The content is disseminated through social media platforms, where personalization algorithms (the mechanism behind filter bubbles) help it reach the intended audience. AI can also be used to generate fake social media accounts that like, share, and comment on the content, increasing its visibility.

- Troll Patrol: Bots and AI-driven accounts may be deployed to engage with users, defend the narrative against criticism, and attack opposing viewpoints to discredit them.

- Feedback Loop: The threat actor monitors engagement and refines their strategies accordingly, creating a feedback loop that enhances the effectiveness of the campaign.

An example of this in action could be seen in political elections, where such tactics might be used to sway public opinion against a particular candidate or policy. By creating and amplifying content that resonates with certain voter segments, a threat actor can create a skewed perception of reality, influencing the outcome of an election.

Russia and China in Information Warfare:

- China’s Influence Regarding Ukraine: Following Russia’s lead, China has censored content related to the conflict on its domestic internet and used state media to echo Russia’s narrative. Evidence also suggests that China has spread disinformation about the conflict on platforms like WeChat, both domestically and possibly on global networks.

- Russian Operations in Sweden and the Balkans: Russia has allegedly created authentic-looking websites in Sweden to spread fake news. During elections in Ukraine and Bulgaria, and a naming referendum in Macedonia, Russian interference was evident through the widespread dissemination of fake news and disinformation via state and social media, aimed at suppressing or delegitimizing the vote.

- Digital Election Interference in the United States: Prior to the November 2018 midterm elections, Microsoft discovered that a unit linked to Russian military intelligence had created websites resembling those of the U.S. Senate and prominent Republican think tanks, attempting to trick visitors into revealing sensitive information.

These examples highlight how state actors strategically use social media and create echo chambers as part of broader information warfare campaigns to influence elections and political processes.

The digital age has amplified the potency of information warfare, turning social media platforms into battlegrounds where perceptions are molded and opinions weaponized. The spread of fake news, creation of echo chambers, and manipulation of algorithms all serve the strategic objectives of those engaged in this covert struggle.

As democracies grapple with this onslaught, the need for resilience has never been greater. This battle is not just for the integrity of information but for the very principles that underpin free societies. The information war is a testament to the power of knowledge and the imperative to safeguard it against those who would weaponize it.

A Path Forward:

To cultivate a more informed public, personalization algorithms must strike a balance by incorporating diverse viewpoints. This balance is crucial not only for individual enlightenment but also for the health of democratic processes. Social media platforms have a pivotal role to play. By prioritizing a variety of perspectives, they can help prevent the formation of echo chambers—insular environments where a single viewpoint is amplified, leaving little room for alternative voices.

Such a multifaceted approach to personalization would pose a significant challenge to threat actors seeking to manipulate public opinion. However, achieving this requires a fundamental reevaluation of how personalized content is generated and presented. Simply restricting access to news sites known for spreading propaganda is insufficient and potentially counterproductive. This strategy risks further restricting the flow of information and may inadvertently intensify biases by narrowing the scope of accessible content.

A more effective strategy involves enhancing the diversity of content within personalization algorithms, fostering a robust exchange of ideas, and supporting the democratic ideal of an informed citizenry.
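To make the idea of diversity within personalization more concrete, here is a minimal sketch in Python of one possible approach: a greedy re-ranking step that discounts an item's score each time its viewpoint has already appeared in the feed. Everything in it is an assumption made for illustration: the `Item` fields, the `viewpoint` labels, the `rerank_with_diversity` function, and the `penalty` value are hypothetical and do not describe how any real platform works. Assigning viewpoint labels reliably is itself a hard problem that this sketch leaves aside.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    title: str
    relevance: float  # hypothetical personalization score in [0, 1]
    viewpoint: str    # hypothetical coarse perspective label


def rerank_with_diversity(items, k, penalty=0.3):
    """Greedily pick up to k items, discounting viewpoints already shown.

    Each item is scored as relevance minus `penalty` times the number of
    already selected items that share its viewpoint.
    """
    remaining = list(items)
    shown = Counter()  # how many selected items each viewpoint has so far
    feed = []
    while remaining and len(feed) < k:
        best = max(
            remaining,
            key=lambda it: it.relevance - penalty * shown[it.viewpoint],
        )
        feed.append(best)
        shown[best.viewpoint] += 1
        remaining.remove(best)
    return feed


# Illustrative candidates: viewpoint "A" dominates on raw relevance.
candidates = [
    Item("Story A1", 0.95, "A"),
    Item("Story A2", 0.90, "A"),
    Item("Story A3", 0.85, "A"),
    Item("Story B1", 0.70, "B"),
    Item("Story C1", 0.65, "C"),
]

feed = rerank_with_diversity(candidates, k=3)
print([item.title for item in feed])

# With no penalty, the feed collapses to a single viewpoint.
baseline = rerank_with_diversity(candidates, k=3, penalty=0.0)
print([item.title for item in baseline])
```

With these example numbers, the penalized feed contains one story from each viewpoint, while the unpenalized baseline contains only viewpoint "A". The `penalty` parameter expresses the trade-off between relevance and diversity: a value of zero reproduces plain relevance ranking, and larger values spread the feed across more perspectives at the cost of showing lower-scored items.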
